WHAT ARE AI COMPUTERS?

Artificial intelligence has been around for a while, though most people know it through science fiction. There it is typically portrayed as a robot or machine given human-like sentience, and the stories center on the repercussions of such a scenario. While that may be a possibility in the distant future, the present reality is far less dramatic. But that doesn’t mean that real AI isn’t amazing in its own right! Advances in this field have led to innovations across many different industries.

What is Artificial Intelligence?

Artificial intelligence, simply put, is the use of computers to approximate the kinds of automatic conclusions a human can make. When we say “conclusions”, we mean receiving information and then making some sort of decision about it. It doesn’t need to be anything as complex or abstract as a philosophical conclusion. It could be as simple as looking at an apple and deciding that it is red.

With that in mind, we can dream up some applications for AI:

- Defect detection in a factory line

- Speech recognition by a virtual assistant like Siri on a person’s smartphone

- License plate recognition

- Facial recognition

- Customer behavior analysis

These are just some of the applications. You can probably think of a lot more. It’s a far cry from the robots depicted in science fiction, but these applications fulfill extremely useful functions in our society: they help improve safety, quality of life, statistical analysis, and even prediction based on accumulated data.

Why Artificial Intelligence?

If all that AI does is mimic a human’s decision-making, then why even bother? Why not just use humans, since we can obviously make these decisions much more easily?

It really comes down to the kinds of decisions that we use AI for. Human beings are very good at making guesses based on comparatively little data. We can even reach accurate conclusions without being able to articulate how we arrived at them. For example, how would you explain to an alien the difference between a dog and a cat? And if you saw a picture of a Chihuahua and a Husky, you’d instantly know that they are both dogs even though you might have a difficult time explaining why.

To get a computer to reach these same conclusions, we would use machine learning, an AI method that teaches computers to recognize patterns by showing them lots of examples. Whereas a human can learn from a few examples, a computer may need tens of thousands, or even millions, of examples to reach accurate conclusions.
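To make "learning from examples" a little more concrete, here is a minimal sketch in Python. It is not how any particular product works: the color values are made up, the function names `train` and `predict` are our own, and the method (averaging the examples for each label, then picking the closest average) is about the simplest approach there is. Real systems use far more data and far more capable models.

```python
from statistics import mean

def train(examples):
    """Compute one average colour (a centroid) per label from labelled examples."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(channel) for channel in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, features):
    """Return the label whose average colour is closest to the given colour."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

# Made-up (R, G, B) measurements of apples, for illustration only.
examples = [
    ((200, 30, 40), "red"),
    ((220, 20, 30), "red"),
    ((180, 40, 50), "red"),
    ((60, 180, 70), "green"),
    ((50, 200, 60), "green"),
    ((70, 170, 80), "green"),
]

model = train(examples)
print(predict(model, (210, 35, 45)))  # prints "red"
```

With only six examples this toy model can tell a red apple from a green one, but nothing else. The more varied the real world gets, the more examples a computer needs.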

On the other hand, what computers are good at is doing a huge number of operations very quickly. And that is primarily what we use AI for. It doesn’t make much sense to have a person on a toll highway trying to make out all the license plate numbers when a computer and some cameras can easily handle all the traffic.

Whereas a single person would have trouble directing hundreds of autonomous mobile robots in a warehouse for picking products, a computer can route all of this communication rather easily, as long as it has enough hardware to support it.

What is an AI Computer?

And that brings us to the topic of hardware, the physical computer itself. Obviously an AI computer is one which can perform the functions we explained above with as much efficiency as possible. But what we discussed above has a lot to do with software and programming. What are the actual physical differences between an AI computer and a regular computer?

The main difference is the use of a GPU, or graphics processing unit. And yes, that is the same GPU that a person would use in their computer to play a video game or do 3D modelling. It just so happens that the kind of math that makes this processor so good at displaying graphics (and that’s all a processor really does: lots of math) also makes it good at AI!

Computer graphics are made up of millions of little dots called pixels. When a computer makes them appear on a screen, or makes changes to them, it needs to do that for huge numbers of them at once. And that’s what GPUs are good at: doing a lot of operations at the same time. The term for this is “parallel processing”, as opposed to “serial processing”, which does one thing at a time.
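The difference between serial and parallel processing can be sketched in a few lines of Python. This is only an illustration of how the work is organized, under some loud assumptions: the function names are hypothetical, the "image" is a short list of made-up grayscale values, and the code runs on ordinary CPU threads, which in Python do not truly execute this kind of math simultaneously. A GPU does the same kind of splitting in hardware, across far more workers.

```python
from concurrent.futures import ThreadPoolExecutor

def brighten(pixel, amount=40):
    """Brighten one grayscale pixel, clamping the result to the 0-255 range."""
    return min(255, pixel + amount)

def brighten_serial(pixels):
    """Serial processing: visit the pixels one after another."""
    return [brighten(p) for p in pixels]

def brighten_parallel(pixels, workers=4):
    """Parallel-style processing: split the pixels into chunks, one per worker."""
    chunk_size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + chunk_size] for i in range(0, len(pixels), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns the results in the same order as the chunks went in.
        results = list(pool.map(brighten_serial, chunks))
    return [p for chunk in results for p in chunk]

pixels = [0, 100, 200, 250] * 4  # a made-up 16-pixel "image"
print(brighten_serial(pixels) == brighten_parallel(pixels))  # prints True
```

The key point is that each pixel's result does not depend on any other pixel, so the work can be divided among as many workers as are available without changing the answer.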

One of the reasons a GPU is good at this is that it has a lot of cores. Cores are the little brains in the processor that do these calculations. GPUs can have THOUSANDS of cores. In comparison, regular computer processors (CPUs) have far fewer cores.
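A bit of back-of-the-envelope arithmetic shows why the core count matters. The sketch below assumes a Full HD frame (1920 × 1080 pixels) and two illustrative core counts, 8 and 2048, which were picked for the example and do not describe any specific product. It also assumes the work splits perfectly evenly, which real hardware only approximates.

```python
import math

def pixels_per_core(width, height, cores):
    """Pixels each core must handle if one frame is split evenly across cores."""
    return math.ceil(width * height / cores)

frame = (1920, 1080)  # a Full HD frame: 2,073,600 pixels
for cores in (8, 2048):  # illustrative core counts, not a specific product
    print(cores, "cores ->", pixels_per_core(*frame, cores), "pixels per core")
# prints:
# 8 cores -> 259200 pixels per core
# 2048 cores -> 1013 pixels per core
```

Keep in mind that a GPU core is much simpler than a CPU core, so this is not a direct speed comparison. It only shows how much less work each core has to do when there are thousands of them.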

AI applications benefit greatly from this type of parallel processing. The obvious reason is that a lot of AI has to do with image processing, such as facial recognition, defect detection and autonomous driving. But instead of displaying an image or video on a screen, it works in reverse: it takes in a recorded image or video and draws conclusions from it. Machine learning in general, including non-visual applications such as speech recognition, also benefits greatly from this type of processing.

AI-Specific Hardware

While the general-purpose GPUs that you would find at your local computer store are extremely proficient in AI applications, they are still designed primarily for general use. That is to say, they are not application-specific, so there is a lot of room for efficiency gains.

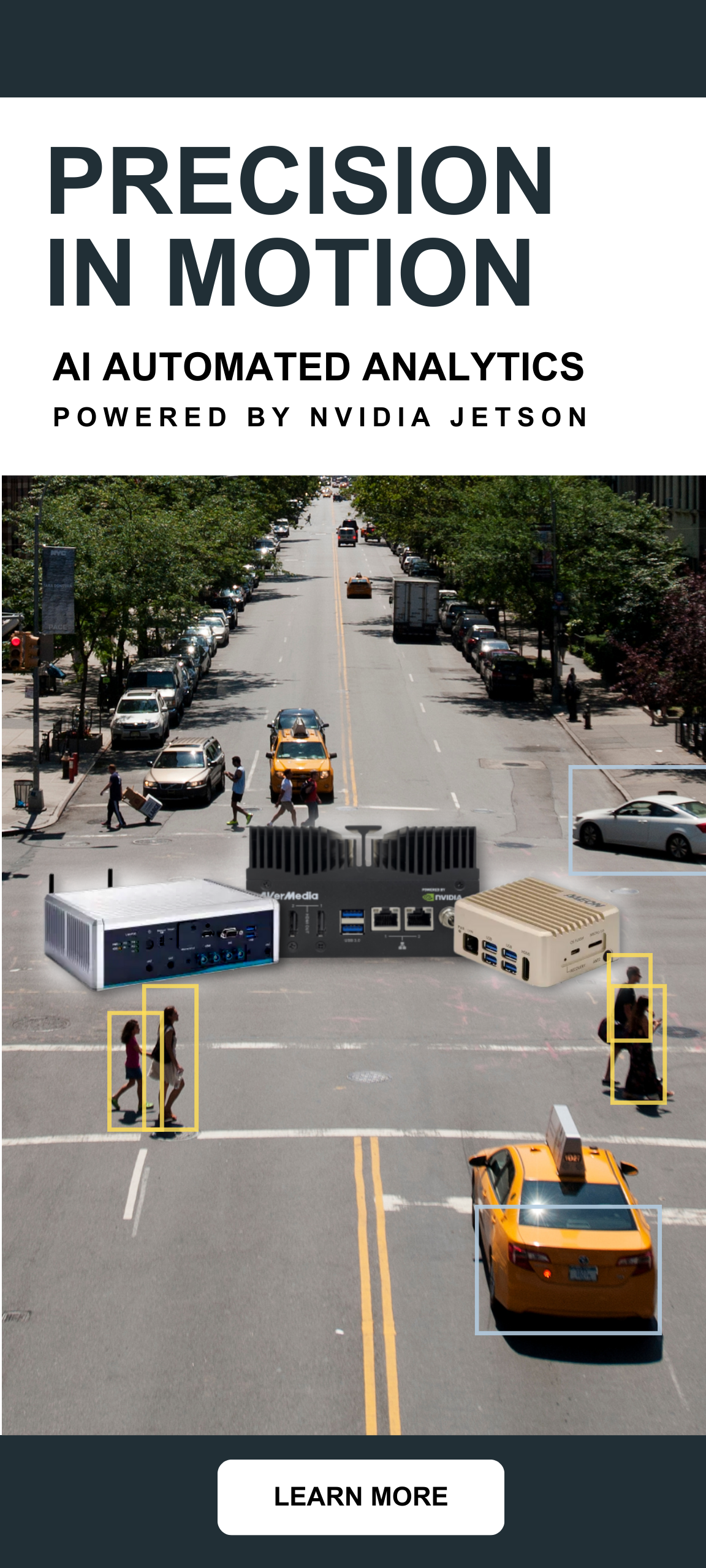

That is why Nvidia has created its line of Jetson computers with integrated GPUs designed specifically for AI, and Intel has released its own line of products called Vision Processing Units (VPUs). While there are differences between these pieces of hardware, both companies achieve much greater efficiency by designing their devices specifically for the types of operations that AI applications require. This enables these devices to achieve improvements such as:

- Smaller size

- Lower power usage

- Less heat generation

- Fanless design

- Rugged design

In other words, these computers are designed specifically to run AI applications in the field as efficiently as possible, and at a much lower price point.